Light field technology for film production

From 2014 to 2015 we carried out a proof-of-concept research project on light field technology. The aim of the project was to integrate a light field camera array into a professional film production, to develop and test efficient workflows, and to discuss the aesthetic, technical and economic aspects of various use cases.

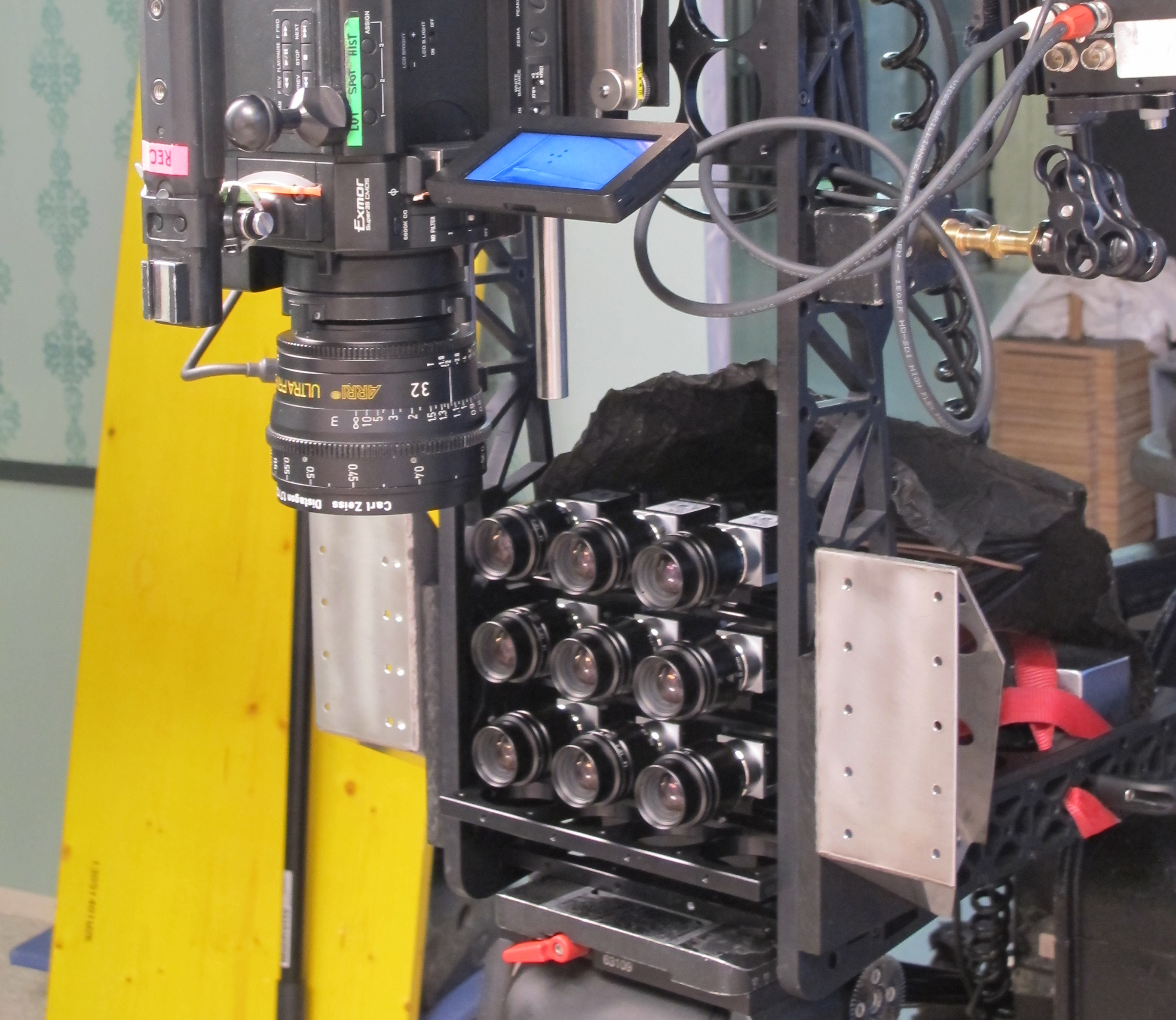

Stuttgart Media University (HdM) cooperated with Fraunhofer IIS in Erlangen, which developed a prototype array of nine cameras. The array provided data on illuminance, direction of light and dispersion, as required for light field technology. From this data, depth maps and normal maps could be rendered with computational photography algorithms. Applying this data considerably extends the aesthetic possibilities and opens up new fields of application, e.g. rendering of virtual focal planes and depth of field, as well as stereo views, depth maps, normal maps, autostereoscopy and super-resolution.
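As an illustration of how a virtual focal plane can be rendered from array footage, the following is a minimal shift-and-add sketch, not the algorithm used in the project. It assumes a regular 3x3 camera grid (the actual layout of the nine cameras is not stated here), integer pixel disparities, and tiny synthetic images; the names `shift_view`, `refocus` and `make_point_views` are hypothetical.

```python
def shift_view(view, dx, dy):
    """Return a copy of `view` shifted by integer (dx, dy) pixels, zero-padded."""
    h, w = len(view), len(view[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = view[sy][sx]
    return out


def refocus(views, disparity):
    """Average all views after aligning them for one focal plane.

    views:     dict mapping a grid offset (u, v) to a 2D list of floats
    disparity: pixel shift per grid step of objects on the desired focal plane
    """
    first = next(iter(views.values()))
    h, w = len(first), len(first[0])
    acc = [[0.0] * w for _ in range(h)]
    for (u, v), view in views.items():
        # Undo the parallax that an object on the focal plane shows in this view.
        aligned = shift_view(view, -u * disparity, -v * disparity)
        for y in range(h):
            for x in range(w):
                acc[y][x] += aligned[y][x]
    n = len(views)
    return [[value / n for value in row] for row in acc]


def make_point_views(size=9, point=(4, 4), disparity=2):
    """Synthetic test data: one bright point seen by an assumed 3x3 grid."""
    views = {}
    for v in (-1, 0, 1):
        for u in (-1, 0, 1):
            img = [[0.0] * size for _ in range(size)]
            img[point[1] + v * disparity][point[0] + u * disparity] = 1.0
            views[(u, v)] = img
    return views


views = make_point_views()
in_focus = refocus(views, disparity=2)      # focal plane on the point
out_of_focus = refocus(views, disparity=0)  # focal plane elsewhere
print(in_focus[4][4], out_of_focus[4][4])
```

When the chosen disparity matches the point, all nine views align and the point keeps its full intensity; at any other disparity its energy is spread over nine positions, which is the synthetic depth-of-field blur. A production implementation would need sub-pixel shifts and calibrated camera positions.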

The light field videography research project included improving the algorithms used for computing dense fields, programming plugins for the compositing software NUKE, and optimizing workflows for various use cases in film production.

The research group at HdM created visual concepts for suitable scenes and realized them in a professional studio environment. The goal of producing a light field short film was to prove that light field technology can be used in a professional film production environment with adapted workflows.

The resulting short film "Coming Home" features light field scenes with various applications, such as virtual focus, view rendering, depth-based grading and depth-based matte extraction. The short film was presented at NAB 2015 in Las Vegas. The project concluded with the publication of the paper "Multi-camera system for depth based visual effects and compositing", presented at CVMP in 2015.

Links:

https://vimeo.com/130973153

https://vimeo.com/130983212

Multi-camera system for depth based visual effects and compositing

Abstract of CVMP Paper 2015

Post-production technologies like visual effects (VFX) or digital grading are essential for visual storytelling in today’s movie production. Tremendous efforts are made to seamlessly integrate computer generated (CG) elements into live action plates, to refine looks and to provide various delivery formats such as Stereo 3D (S3D). Thus, additional tools to assist and improve element integration, grading and S3D techniques could ease complicated, time-consuming, manual and costly processes. Geometric data like depth information is key for these tasks, but a typical main unit camera shoot does not deliver this information. Although e.g. light detection and ranging (LIDAR) scans are used to capture 3D set information, frame-by-frame geometric information for dynamic scenes including moving camera, actors and props is not recorded. Stereo camera systems deliver additional data for depth map generation, but accuracy is limited. This work suggests a method of capturing light field data within a regular live action shoot. We compute geometric data for postproduction use cases such as relighting, depth-based compositing, 3D integration, virtual camera, digital focus as well as virtual backlots. Thus, the 2D high quality live action plate shot by the Director of Photography (DoP) comes along with image data from the light field array that can be further processed and utilized. As a proof-of-concept we produced a fictitious commercial using a main camera and a multi-camera array fitted into an S3D mirror rig. For this reference movie, the image of the center array cam has been selected for further image manipulation. The quality of the depth information is evaluated on simulated data and live action footage. Our experiments show that quality of depth maps depends on array geometry and scene setup.
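To give a rough sense of why depth quality depends on array geometry, the sketch below uses the standard rectified two-view relation Z = f * B / d and its first-order error propagation. It is not taken from the paper; the function names and all numeric values (focal length in pixels, baselines, matching error) are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres for a rectified camera pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical setup: 2000 px focal length, subject at 4 m,
# disparity matching accurate to a quarter pixel.
focal_px = 2000.0
depth_m = 4.0
match_error_px = 0.25

for baseline_m in (0.05, 0.10, 0.20):
    disparity_px = focal_px * baseline_m / depth_m
    error_m = depth_error(focal_px, baseline_m, depth_m, match_error_px)
    print(f"baseline {baseline_m:.2f} m: disparity {disparity_px:.1f} px, "
          f"depth error about {error_m * 100:.1f} cm")
```

Under these assumptions the depth error grows with the square of the subject distance and shrinks in proportion to the baseline, so both the spacing of the cameras and the placement of actors and props affect the usable depth precision.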